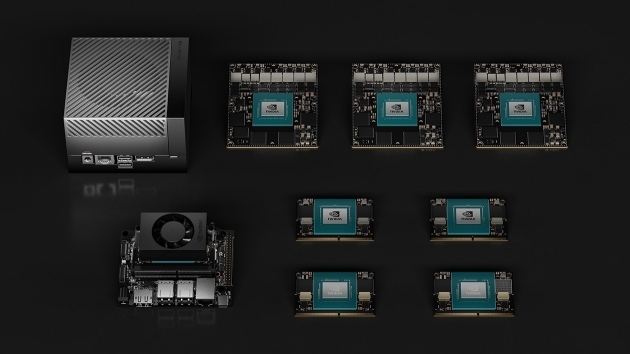

The NVIDIA Jetson and NVIDIA Jetson Orin devices are embedded Linux devices featuring a GPU-accelerated processor (NVIDIA Tegra) targeted at edge AI applications. You can easily add an external USB microphone or camera, and both are fully supported by Edge Impulse. You’ll be able to sample raw data, build models, and deploy trained machine learning models directly from the Edge Impulse Studio.

In addition to the NVIDIA Jetson and NVIDIA Jetson Orin devices, we also recommend that you add a camera and/or a microphone. Most popular USB webcams work fine on the development board out of the box.

‘NVIDIA Jetson Orin’ refers to the following devices:

- Jetson AGX Orin Series, Jetson Orin NX Series, Jetson Orin Nano Series

‘NVIDIA Jetson’ refers to the following devices:

- Jetson AGX Xavier Series, Jetson Xavier NX Series, Jetson TX2 Series, Jetson TX1, Jetson Nano

‘Jetson’ refers to all NVIDIA Jetson devices.

Powering your Jetson

Although powering your Jetson via USB is technically supported, some users report on forums that they have issues using USB power. If you have any issues such as the board resetting or becoming unresponsive, consider powering via the DC barrel connector.

Don’t forget to change the jumper! See your target’s manual for more information.

An added bonus to powering via the DC barrel plug: you can carry out your first boot without an external monitor or keyboard.

NVIDIA Jetson Orin

Installing dependencies

Follow NVIDIA’s setup instructions found at NVIDIA Jetson Getting Started Guide depending on your hardware. For example:

- NVIDIA Jetson Orin Nano Developer Kit

- NVIDIA Jetson AGX Orin Developer Kit

- NVIDIA Jetson Nano Developer Kit

JetPack

For NVIDIA Jetson Orin:

- use SD Card image with JetPack 5.1.2 or

- use SD Card image with JetPack 6.0

Note that you may need to update the UEFI firmware on the device when migrating to JetPack 6.0 from earlier JetPack versions. See NVIDIA’s Initial Setup Guide for Jetson Orin Nano Developer Kit for instructions on how to get JetPack 6.0 GA on your device.

For NVIDIA Jetson devices, use the SD Card image with JetPack 4.6.4. See also JetPack Archive or Jetson Download Center.

When finished, you should have a bash prompt via the USB serial port, or via an external monitor and keyboard attached to the Jetson. You will also need to connect your Jetson to the Internet via the Ethernet port (there is no WiFi on the Jetson). After setting up the Jetson the first time via keyboard or the USB serial port, you can SSH in.

Make sure your Ethernet is connected to the Internet

Check from a shell on the Jetson that it can reach the Internet before continuing.

Running the setup script
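Because the setup script downloads packages, it helps to confirm first that the Jetson can reach the Internet. The following is a minimal sketch, not the original command from this guide: the function name check_online is hypothetical, and the host in the example is just one reachable host you might pick.

```shell
#!/bin/sh
# Sketch: report whether a host answers a single ping.
# check_online is a hypothetical helper name, not part of any Edge Impulse tool.
check_online() {
    host="$1"
    if ping -c 1 "$host" >/dev/null 2>&1; then
        echo "online"
    else
        echo "offline"
    fi
}

# Example: check_online edgeimpulse.com
```

If the result is "offline", check the Ethernet cable and network configuration before running the setup script.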

To set this device up in Edge Impulse, run the following commands (from any folder). When prompted, enter the password you created for the user on your Jetson/Orin in step 1. The entire script takes a few minutes to run (using a fast microSD card).

For Jetson:

Connecting to Edge Impulse

With all software set up, connect your camera or microphone to your Jetson (see ‘Next steps’ further on this page if you want to connect a different sensor), and run edge-impulse-linux. To switch projects, run the command with --clean.

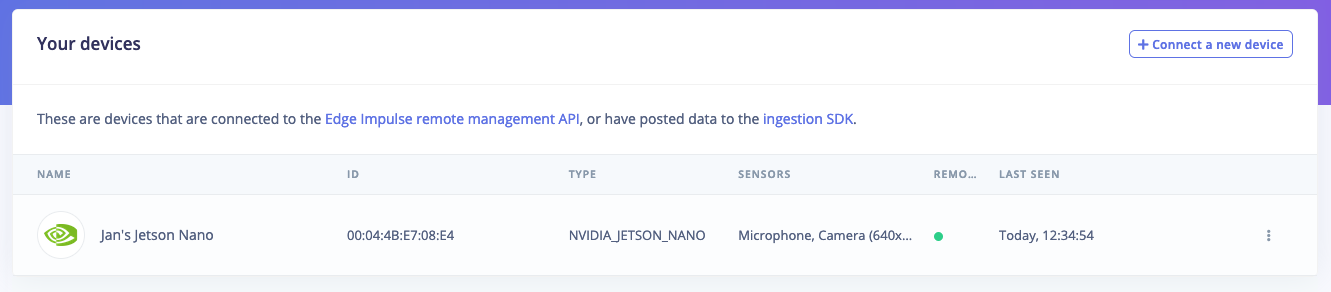

Verifying that your device is connected

That’s all! Your device is now connected to Edge Impulse. To verify this, go to your Edge Impulse project, and click Devices. The device will be listed here.

Device connected to Edge Impulse.

Next steps: building a machine learning model

With everything set up you can now build your first machine learning model with these tutorials:

- Keyword spotting

- Sound recognition

- Image classification

- Object detection

- Object detection with centroids (FOMO)

| JetPack version | EIM Deployment | Docker Deployment |
|---|---|---|
| 4.6.4 | NVIDIA Jetson (JetPack 4.6.4) | Docker container (NVIDIA Jetson - JetPack 4.6.4) |
| 5.1.2 | NVIDIA Jetson Orin (JetPack 5.1.2) | Docker container (NVIDIA Jetson Orin - JetPack 5.1.2) |
| 6.0 | NVIDIA Jetson Orin (JetPack 6.0) | Docker container (NVIDIA Jetson Orin - JetPack 6.0) |
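The table above maps each JetPack version to a deployment target. As a quick reference, the same lookup can be scripted. This is only a sketch: deployment_for_jetpack is a hypothetical helper, not an Edge Impulse command, and it covers only the EIM column of the table.

```shell
#!/bin/sh
# Sketch: map a JetPack version to the EIM deployment target named in the table.
# deployment_for_jetpack is a hypothetical helper, not an Edge Impulse command.
deployment_for_jetpack() {
    case "$1" in
        4.6.4) echo "NVIDIA Jetson (JetPack 4.6.4)" ;;
        5.1.2) echo "NVIDIA Jetson Orin (JetPack 5.1.2)" ;;
        6.0)   echo "NVIDIA Jetson Orin (JetPack 6.0)" ;;
        *)     echo "no matching deployment target for JetPack $1" >&2
               return 1 ;;
    esac
}

deployment_for_jetpack 5.1.2
```

Versions that are not in the table return a non-zero status, so a calling script can stop early instead of picking the wrong target.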

Deploying back to device

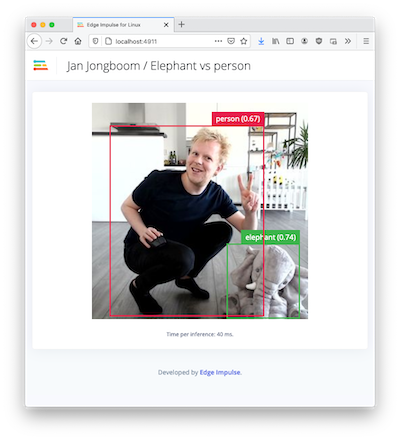

To run your impulse locally, just connect to your Jetson again, and run edge-impulse-linux-runner.

Image model?

If you have an image model then you can get a peek of what your device sees by being on the same network as your device, and finding the ‘Want to see a feed of the camera and live classification in your browser’ message in the console. Open the URL in a browser and both the camera feed and the classification are shown:

Live feed with classification results

Troubleshooting

edge-impulse-linux reports “OOM killed!”
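One way to keep a build from exhausting memory is to give make an explicit job count rather than a bare -j. The following is a minimal sketch, assuming nproc (GNU coreutils) is available. The helper name safe_jobs and the default cap of 2 are illustrative choices, not values recommended by Edge Impulse.

```shell
#!/bin/sh
# Sketch: choose a bounded job count for make instead of an unlimited "make -j".
# safe_jobs is a hypothetical helper; the default cap of 2 is an arbitrary example.
safe_jobs() {
    cap="${1:-2}"
    cores="$(nproc)"
    if [ "$cores" -lt "$cap" ]; then
        echo "$cores"
    else
        echo "$cap"
    fi
}

# Example: make -j"$(safe_jobs 2)"
```

The cap bounds the job count even on boards with many cores, since memory, not core count, is usually the limiting resource in this failure.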

Using make -j without specifying a job limit can overtax system resources and cause “OOM killed” errors, especially on resource-constrained devices. This has been observed on many of our supported Linux-based SBCs. Avoid using make -j without a limit. If you experience OOM errors, limit the number of concurrent jobs. A safe practice is to pass make -j an explicit job count.

edge-impulse-linux reports “[Error: Input buffer contains unsupported image format]”

This is probably caused by a missing dependency on libjpeg. If you run:

edge-impulse-linux reports “Failed to start device monitor!”
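For this error, a quick way to spot ownership problems is to list everything under a directory that does not belong to the current user. This is a sketch under assumptions: not_owned_by_me is a hypothetical helper name, and the chown line in the comments is one possible fix rather than a command quoted from this guide.

```shell
#!/bin/sh
# Sketch: list entries under a directory that are not owned by the current user.
# not_owned_by_me is a hypothetical helper name.
not_owned_by_me() {
    find "$1" ! -user "$(id -u)" -print
}

# Example check:  not_owned_by_me "$HOME/.config"
# Possible fix:   sudo chown -R "$(id -u)" "$HOME"
```

Empty output means every entry under the directory is already owned by you.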

If you encounter this error, ensure that your entire home directory is owned by you (especially the .config folder).

Long warm-up time and under-performance

By default, the Jetson enables a number of aggressive power-saving features that disable or slow down hardware detected as not in use. Experience indicates that sometimes the GPU cannot power up fast enough, nor stay on long enough, to deliver its best performance. You can run a script to enable maximum performance on your Jetson.

ONLY DO THIS IF YOU ARE POWERING YOUR JETSON FROM A DEDICATED POWER SUPPLY. DO NOT RUN THIS SCRIPT WHILE POWERING YOUR JETSON THROUGH USB.

Your Jetson device can operate in different power modes: a set of power budgets, each with a predefined configuration of CPU and GPU frequencies and number of cores online. To enable maximum performance:

- Switch to a mode with the maximum power budget and/or frequencies.

- Then set the clocks to maximum.

- NVIDIA Jetson Orin, Jetson Orin NX and Jetson AGX Orin

- NVIDIA Jetson Xavier NX and Jetson AGX Xavier

For NVIDIA Jetson Xavier NX use mode ID 8.

Additionally, due to the Jetson GPU’s internal architecture, running small models on it is less efficient than running larger models. For example, the continuous gesture recognition model runs faster on the Jetson CPU than on the GPU with TensorRT acceleration. According to our benchmarks, vision models and larger keyword spotting models run faster on the GPU, while smaller keyword spotting models and gesture recognition models (including simple fully connected neural networks that can be used for analyzing other time-series data) perform better on the CPU.
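The two steps for enabling maximum performance (switch to the highest power mode, then set the clocks to maximum) can be sketched as follows. This assumes NVIDIA’s nvpmodel and jetson_clocks tools are installed and reachable through sudo. The function name set_max_performance and its dry-run argument are hypothetical. Mode ID 8 is the Jetson Xavier NX value mentioned above; other devices use different IDs, so check the modes defined for your board first.

```shell
#!/bin/sh
# Sketch: switch to a given power mode, then set clocks to maximum.
# set_max_performance is a hypothetical helper; pass "echo" as the second
# argument to print the commands instead of running them.
set_max_performance() {
    mode_id="$1"
    runner="${2:-}"
    $runner sudo nvpmodel -m "$mode_id" &&
        $runner sudo jetson_clocks
}

# Dry run for Jetson Xavier NX (mode ID 8): prints the two commands.
set_max_performance 8 echo
```

Dropping the echo argument runs the commands for real, which should only be done when the Jetson is powered from a dedicated power supply.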